GA4 in BigQuery Cost Calculator

Wanna know how much it costs to run BigQuery?

No more guesswork!

Enter your data to estimate your costs

Total Cost Estimate

Storage Cost

Consider changing to Physical Bytes Storage Billing in "advanced settings".

Details

After you click "Calculate", this section shows: newly added GB / mo., total GB, average cost per GB / mo., months of long term storage, months of short term storage, and streaming cost / mo.

The Compute Cost depends on how often the data is queried.

If the data is not queried, the compute cost is $0.

Queries can come from:

- Queries in the BigQuery web console

- Connecting a BigQuery data source to Looker Studio (or another BI tool)

- Processing the data on a schedule (DBT, “scheduled queries”, or Google DataForm)

Compute Cost

After you click "Calculate", this section shows the estimated cost (and TB scanned) per month for in-console queries, BI tool queries, and scheduled processing.

Details, Tips, and Tricks

Billing Storage Model and Compression Ratio

TL;DR

By default BigQuery bills by Logical Bytes, but for GA4 data Physical Bytes billing usually works out several times cheaper (roughly 2x to 10x at the compression ratios described below), because the data compresses well.

If you’re really cost concerned, go to the BigQuery dataset and switch to Physical Bytes Storage Billing.

Switching makes no difference to query performance or query cost.

Details

The Billing Storage Model is based on either Logical Bytes (cost per GB is lower) or Physical Bytes (cost per GB is higher). The Physical Bytes are the compressed actual disk space – the Logical Bytes are the uncompressed data size.

Whether you should choose Logical Bytes or Physical Bytes comes down to whether or not your data has a better than 2x compression ratio. This is because the Physical Bytes billing is 2x as expensive as the Logical Bytes billing per GB.

You can calculate your compression ratio by looking at the Details of your GA4 dataset in BigQuery and taking the Logical Storage and dividing by the Physical Storage size. Typically the larger your data volume the better your compression ratio. Small datasets might be 4-6x. Large datasets are commonly above 20x.
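The rule of thumb above can be sketched in a few lines of Python. This is a minimal illustration, not the calculator's own logic: the function names and the 500 GB / 50 GB figures are hypothetical, and the 2x price ratio is the one quoted above. It also ignores time-travel and fail-safe bytes, which Physical Bytes billing charges for as well.

```python
def compression_ratio(logical_gb: float, physical_gb: float) -> float:
    """Logical (uncompressed) size divided by physical (compressed) size."""
    if physical_gb <= 0:
        raise ValueError("physical_gb must be positive")
    return logical_gb / physical_gb


def cheaper_billing_model(logical_gb: float, physical_gb: float,
                          price_ratio: float = 2.0) -> str:
    """Pick the cheaper model, assuming physical costs `price_ratio` times more per GB."""
    ratio = compression_ratio(logical_gb, physical_gb)
    return "physical" if ratio > price_ratio else "logical"


# Hypothetical dataset: 500 GB logical, 50 GB physical (numbers made up for illustration).
ratio = compression_ratio(500, 50)
model = cheaper_billing_model(500, 50)
# How many times cheaper physical billing is at this ratio and a 2x price ratio.
savings_factor = ratio / 2.0
```

With these made-up numbers the ratio is 10x, so Physical Bytes billing comes out about 5x cheaper.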

Flip back and forth in the advanced settings above to see the difference.

Long Term Storage

BigQuery automatically moves data into long term storage (cheaper cost per GB / mo.) once it has gone 90 consecutive days without being written to. Reading the data doesn’t reset that clock.

For GA4 data streams, this means each day's data goes into long term storage after ~90 days, because the tables are not written to retroactively (after the first 1-3 days of “settling”).
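To see how the 90-day rule splits a storage bill, here is a rough sketch. It assumes a steady volume of new data each month and treats 90 days as three months. The function name and the default prices (0.02 and 0.01 per GB / mo.) are illustrative assumptions, so check the current BigQuery price list for your region and billing model.

```python
def storage_cost_per_month(new_gb_per_month: float, months_of_history: int,
                           active_price: float = 0.02,     # assumed price per GB / mo.
                           long_term_price: float = 0.01,  # assumed price per GB / mo.
                           active_months: int = 3) -> dict:
    """Split accumulated data into active vs. long term storage and price each part."""
    short_term = min(months_of_history, active_months)
    long_term = max(months_of_history - active_months, 0)
    active_gb = new_gb_per_month * short_term
    long_term_gb = new_gb_per_month * long_term
    return {
        "active_gb": active_gb,
        "long_term_gb": long_term_gb,
        "monthly_cost": active_gb * active_price + long_term_gb * long_term_price,
    }


# Hypothetical property: 10 GB of new data per month, 12 months of history.
estimate = storage_cost_per_month(10, 12)
```

In this example only the latest three months (30 GB) are billed at the active rate; the other 90 GB are billed at the long term rate.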

Streaming vs. Daily-Only

GA4 can generate two types of BigQuery tables: Daily and Streaming. Daily tables include user attribution and are transferred to BigQuery once per day. Streaming tables are populated within 1-2 minutes of real time.

Daily tables are capped at 1M events per day in the free version of GA4 (up to 2B in GA4 360, which starts at ~$5000 / mo.).

You can avoid the cost of GA4 360, because Streaming tables don’t have a daily max. You pay $0.05 per GB streamed (1 GB is roughly 600k – 800k events). Do the math: it is thousands of times cheaper than a GA4 360 license.
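Here is that math as a small sketch. The 700k events per GB default is simply the midpoint of the range above, and the 2M events per day is a made-up traffic level; the function name is hypothetical.

```python
def streaming_cost_per_month(events_per_day: float,
                             events_per_gb: float = 700_000,  # assumed midpoint of 600k-800k
                             price_per_gb: float = 0.05,
                             days: int = 30) -> float:
    """Estimate the monthly streaming export cost from daily event volume."""
    gb_streamed = events_per_day * days / events_per_gb
    return gb_streamed * price_per_gb


# Hypothetical site sending 2M events per day, double the free Daily export cap.
cost = streaming_cost_per_month(2_000_000)
# Compare with the ~$5000 / mo. starting price of GA4 360 quoted above.
times_cheaper = 5000 / cost
```

At this volume the streaming bill is a few dollars a month, which is where the "thousands of times cheaper" comparison comes from. The gap narrows as event volume grows.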

And you can recreate any data the Daily tables have from the streaming data, easy peasy.

Getting Started with DBT

The GA4 data arrives as a nested stream: the grain of the data is events, and the event parameters sit in a nested field.

One of the first things you’ll want to do to make the data useful is to “denormalize” it, or reshape it into events, sessions, users, and event_products.
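To make the nesting concrete, here is a minimal Python sketch that flattens one exported event. It is an illustration only, not the open-sourced DBT code mentioned in this section, and in practice you would do this in SQL inside BigQuery. The sample event is made up, the helper name `flatten_event` is hypothetical, and the sketch assumes each parameter has a `key` and a `value` holding one of the typed fields listed in `VALUE_FIELDS`.

```python
from typing import Any

# Typed slots a GA4 event parameter value can use in the BigQuery export.
VALUE_FIELDS = ("string_value", "int_value", "float_value", "double_value")


def flatten_event(event: dict[str, Any]) -> dict[str, Any]:
    """Copy top-level fields and lift each event parameter into its own column."""
    flat = {k: v for k, v in event.items() if k != "event_params"}
    for param in event.get("event_params", []):
        value = param.get("value", {})
        # Use the first typed slot that is populated, else None.
        flat[param["key"]] = next(
            (value[f] for f in VALUE_FIELDS if value.get(f) is not None), None
        )
    return flat


# Made-up event in the nested shape described above.
event = {
    "event_name": "page_view",
    "event_params": [
        {"key": "page_location", "value": {"string_value": "https://example.com/"}},
        {"key": "ga_session_id", "value": {"int_value": 1234567890}},
    ],
}
flat = flatten_event(event)
```

One of the little decisions this glosses over: a parameter key that collides with a top-level field silently overwrites it here.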

This will require processing data as it comes in every day and there are 100 little decisions you need to make when doing this.

We have iterated on this functionality since 2022 and have open-sourced our code to do this. Take a look at the link below.

If you aren’t familiar with DBT, now is a great time to learn it. In short, it is a tool for keeping data engineering code in version control and making it easy to collaborate on.

Saving on Compute Costs

Must-dos to cut query costs:

- Partition or shard your tables by date!

- Make your models incremental!

- Make sure Looker Studio date range selectors are connected to the shard field.

- Don’t use Custom Query connectors in Looker Studio (or otherwise). Connect to a materialized table instead (if possible).

- Avoid “select *” – only materialize columns you need.

- Don’t over-materialize in your scheduled processing (DBT, DataForm, etc.)
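To show why the date-related tips matter, here is a rough sketch of on-demand query cost as a function of how many days of data each query scans. The function name, the 6.25 per TB default price, and the traffic numbers are assumptions for illustration, and the sketch ignores any free monthly query allowance.

```python
def monthly_query_cost(gb_per_day_of_data: float, days_scanned: int,
                       queries_per_month: int,
                       price_per_tb: float = 6.25) -> float:  # assumed on-demand price
    """Estimate on-demand cost when every query scans `days_scanned` days of data."""
    tb_scanned = gb_per_day_of_data * days_scanned * queries_per_month / 1024
    return tb_scanned * price_per_tb


# Hypothetical dashboard: 2 GB of data per day, loaded 300 times a month.
full_year = monthly_query_cost(2, 365, 300)   # date filter not tied to the date field
last_30_days = monthly_query_cost(2, 30, 300)  # date filter prunes to 30 days
```

Under these assumptions, a dashboard that scans a full year on every load costs about 12 times as much as one whose date range selector limits the scan to the last 30 days.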

Don’t want to think about any of this nonsense? Contact us to talk about Hosted Analytics and leave it to the nerds.

Book Your Free Strategy Call

To keep you moving forward, your first call will be with an analytics engineer, not a sales rep.

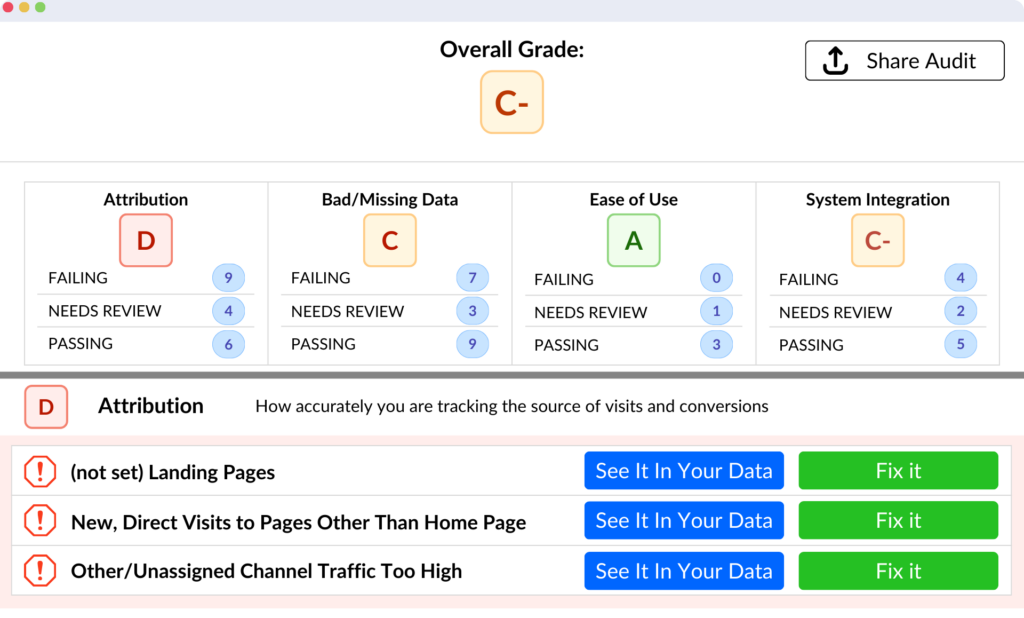

Lots of Specialties. One goal.

Insights are the north star and it takes a team to get them – from analytics/data engineers to analysts and architects.

1. Tell Us What You Need

2. We Optimize Your Analytics

3. Grow Your Market on Data You Trust

Brands That Trust Their Analytics to adMind

Measure your marketing impact

Ready to see how proper analytics can drive growth for you?

Schedule a strategy call today. Learn how a proper analytics practice can help you:

- Maximize your tech stack.

- Develop influential content.

- Align your org on KPIs and metrics.

- Focus teams on what matters.